A study on adversarial attacks in Deep Learning-based traffic signal recognition for autonomous vehicles

Keywords:

Adversarial attack, Autonomous vehicle, Traffic light detection, Image classification, ImageNet, Inception-v3

Abstract

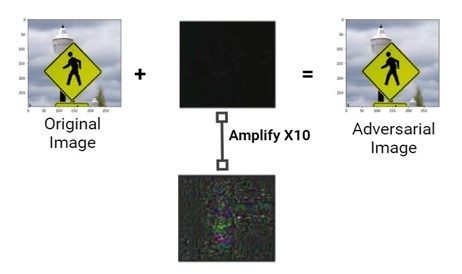

Autonomous vehicles are increasingly common on public roads and are expected to become ubiquitous in the near future. However, recent incidents involving these vehicles have raised serious concerns about their safety, particularly the reliability of their onboard machine learning systems. In this paper, we expose a critical yet underexplored vulnerability, the misclassification of street signs as traffic lights, by conducting a targeted white-box adversarial attack. To the best of our knowledge, this specific vulnerability has not been addressed in the existing literature. We use the Fast Gradient Sign Method (FGSM) to craft adversarial examples with minimal perturbations that deceive a state-of-the-art image classification model, Inception-v3, trained on the ImageNet dataset. We also introduce a custom dataset of real-world street sign and traffic light images to test the attack under more domain-specific conditions. We evaluate the attack using the attack success rate, the Structural Similarity Index (SSIM), and the L2 distance; our method achieves a 100% misclassification success rate. These results highlight the pressing need for robust defenses against adversarial attacks in safety-critical systems. We further discuss technical challenges, potential defenses such as adversarial training and obfuscated gradients, and directions for future research to improve the resilience of deep learning systems in autonomous vehicles.
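To make the attack and the evaluation metrics named above concrete, the following is a minimal pure-Python sketch under stated assumptions; it is not the authors' implementation. It replaces Inception-v3 with a hypothetical two-class linear softmax classifier, treats images as flattened lists of pixel values in [0, 1], and uses the common targeted FGSM variant x_adv = clip(x − ε·sign(∇x J(x, y_target))), which steps against the gradient of the loss for the target class. All function names are illustrative, and `global_ssim` computes SSIM over a single global window rather than the locally windowed index of Wang et al., so its values will not match standard SSIM implementations.

```python
import math


def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


def logits(W, b, x):
    # Hypothetical toy linear classifier: z_k = sum_d W[k][d] * x[d] + b[k].
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]


def predict(W, b, x):
    # Index of the highest-scoring class.
    z = logits(W, b, x)
    return max(range(len(z)), key=lambda k: z[k])


def input_gradient(W, b, x, target):
    # Gradient of the cross-entropy loss -log p_target with respect to x:
    # dJ/dx = W^T (p - onehot(target)).
    p = softmax(logits(W, b, x))
    delta = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
    return [sum(W[k][d] * delta[k] for k in range(len(W))) for d in range(len(x))]


def sign(v):
    # Returns +1, 0, or -1.
    return (v > 0) - (v < 0)


def targeted_fgsm(W, b, x, target, eps):
    # One targeted FGSM step: move each pixel by eps against the sign of the
    # loss gradient for the target class, then clip to the valid range [0, 1].
    g = input_gradient(W, b, x, target)
    return [min(1.0, max(0.0, xi - eps * sign(gi))) for xi, gi in zip(x, g)]


def l2_distance(x, y):
    # Euclidean distance between the clean and adversarial images.
    return math.sqrt(sum((a - c) ** 2 for a, c in zip(x, y)))


def global_ssim(x, y, data_range=1.0):
    # Single-window SSIM using the usual constants C1=(0.01L)^2, C2=(0.03L)^2.
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((c - my) ** 2 for c in y) / n
    cov = sum((a - mx) * (c - my) for a, c in zip(x, y)) / n
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return num / den


def attack_success_rate(W, b, images, target, eps):
    # Fraction of images whose adversarial version is classified as `target`.
    hits = sum(predict(W, b, targeted_fgsm(W, b, x, target, eps)) == target
               for x in images)
    return hits / len(images)
```

For example, with `W = [[1, 1, -1, -1], [-1, -1, 1, 1]]`, `b = [0, 0]`, class 0 standing in for "street sign" and class 1 for "traffic light", the clean input `[0.8, 0.7, 0.3, 0.2]` is classified as class 0, while `targeted_fgsm(W, b, x, target=1, eps=0.3)` yields an input classified as class 1 at an L2 distance of 0.6. Larger ε raises the success rate at the cost of a larger, more visible perturbation, which is the trade-off that SSIM and L2 distance are meant to quantify.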
